Six Principles for Scientists Seeking Hiring, Promotion, and Tenure

Academic medical institutions, when hiring or promoting faculty who they hope will move science forward in impactful ways, are confronted with a familiar problem: it is difficult to predict whose scientific contributions will be greatest, or will best meet an institution’s values and standards. Some aspects of a scientist’s work are easily determined and quantified, like the number of published papers. However, publication volume does not measure “quality”, if by quality we mean substantive, impactful science that addresses valuable questions and is reliable enough to build upon.

This article by Florian Naudet, John P. A. Ioannidis, Frank Miedema, Ioana A. Cristea, Steven N. Goodman and David Moher originally appeared on the LSE Impact of Social Sciences blog as “Six principles for assessing scientists for hiring, promotion, and tenure” and is reposted under the Creative Commons license (CC BY 3.0).

Recognizing this, many institutions augment publication numbers with measures they believe better capture the scientific community’s judgement of research value. The journal impact factor (JIF) is perhaps the best known and most widely used of such metrics. A journal’s JIF for a given year is the number of citations received in that year by items the journal published in the preceding two years, divided by the number of citable items it published in those two years; in effect, the average number of recent citations per article. Like publication numbers, it is easy to measure but may fail to capture what an institution values. For instance, at Rennes 1 University, the faculty of medicine’s scientific assessment committee evaluates candidates using a “mean” impact factor (the mean JIF across all of a candidate’s published papers), and hiring of faculty requires publications in journals with the highest JIF. The committee tries to make this more granular by also using a score that corrects the JIF for research field and author rank. (This score, “Système d’Interrogation, de Gestion et d’Analyse des Publications Scientifiques”, SIGAPS, is not publicly available.) In China, Qatar and Saudi Arabia, among other countries, scientists receive monetary bonuses for research papers published in high-JIF journals, such as Nature.
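To make the arithmetic concrete, here is a minimal Python sketch of the two-year JIF calculation and of a “mean impact factor” of the kind described above. The function names and all numbers are hypothetical illustrations; this is not Clarivate’s exact procedure (which, among other things, decides what counts as a citable item), and it is not the SIGAPS score.

```python
from statistics import mean


def two_year_jif(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Citations received in year Y by items published in Y-1 and Y-2,
    divided by the number of citable items published in Y-1 and Y-2."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_this_year / citable_items_prev_two_years


def mean_impact_factor(journal_jifs: list[float]) -> float:
    """Hypothetical 'mean JIF' of a candidate: the average JIF of the journal
    in which each of their papers appeared (one entry per paper)."""
    return mean(journal_jifs)


# Hypothetical journal: 400 citable items over two years, cited 1,200 times this year.
print(two_year_jif(1200, 400))  # 3.0

# Hypothetical candidate with four papers in journals of differing JIF.
print(mean_impact_factor([3.0, 3.0, 12.5, 1.5]))  # 5.0
```

The candidate’s mean of 5.0 is driven largely by a single paper in a high-JIF journal, and it says nothing about how often any of the candidate’s own papers were actually cited.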

Understanding the value of a paper requires careful appraisal. Reading a few papers that best characterize the corpus of a scientist’s research offers insights into the quality of their work that cannot be captured by JIF metrics. The JIF provides information about the citation influence of an entire journal, but it is far less informative for assessing an individual publication and even less useful for assessing the authors of these papers. Journal citation distributions are highly skewed, with a small percentage of papers accounting for a large majority of the total citations, meaning that the large majority of papers, even in high-JIF journals, will have relatively few citations. Furthermore, publication bias exists even at the highest-JIF journals: the statistical significance of results affects the publication prospects of studies of equal quality. Arguably the most important finding of the Open Science Collaboration’s “reproducibility” project, which chose 100 studies at random from three leading psychology journals, is that despite exceedingly modest median sample sizes (medians from 23 to 76), 97% of those studies reported statistically significant results. Clearly, the journals were using significance as a publication criterion, and the authors knew this.
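Because the JIF is a mean, a skewed citation distribution lets a few highly cited papers pull it well above what a typical paper in the journal receives. The short Python sketch below uses ten invented citation counts (not data from any real journal) to compare the mean with the median and to compute the share of citations going to the most-cited papers; `top_share` is a hypothetical helper written only for this illustration.

```python
from statistics import mean, median

# Invented citation counts for ten papers in one hypothetical journal.
citations = [120, 45, 8, 5, 4, 3, 2, 1, 1, 0]


def top_share(counts: list[int], fraction: float) -> float:
    """Share of all citations received by the most-cited `fraction` of papers."""
    ranked = sorted(counts, reverse=True)
    k = max(1, round(len(ranked) * fraction))  # number of papers in the top slice
    return sum(ranked[:k]) / sum(ranked)


avg = mean(citations)
print(f"mean = {avg:.1f}, median = {median(citations)}")
print(f"top 20% of papers earn {top_share(citations, 0.2):.0%} of citations")
below = sum(c < avg for c in citations)
print(f"{below} of {len(citations)} papers are cited less than the mean")
```

With these made-up counts the mean is 18.9 while the median is 3.5, the two most-cited papers account for about 87% of all citations, and 8 of the 10 papers are cited less often than the mean.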

This brings us to a critical feature of these evaluation systems that threatens the scientific enterprise, embodied in Goodhart’s Law: when a measure becomes an optimization target, it ceases to be a valid measure. This happens when the measure can be manipulated by those it is measuring (known as “gaming”), which devalues the measure and produces unintended harmful consequences. In the case of scientific research, these unintended (but predictable) practices include p-hacking, salami science, sloppy methods, selective reporting, low transparency, extolling of spurious results (aka spin and hype), and a wide array of other detrimental research practices that promote publication and careers, but not necessarily reproducible research. In this way, the “publish or perish” culture defined by these metrics drives a system of career advancement and personal survival in academia that contributes to the reproducibility crisis and to the sub-optimal quality of the scientific publication record. The problem described above is systemic in nature and not an issue of individual unethical misbehavior.

There is abundant empirical evidence that the current, internationally dominant incentive and reward system in academia, still used by most stakeholders including major funders, is problematic. Scientists working in fields of research that do not easily yield “high-impact” papers, but that have high societal impact and address pressing needs, are undervalued. Also, scientists who do meaningful research with limited funds are disadvantaged when they apply for funding, compared with scientists who have more extensive funding track records, because a funding track record is a strong predictor of future funding. Indicators used by funders, universities, and institutes to evaluate research and (teams of) researchers in decisions of promotion and tenure should also take societal and broader impact into account.

To address these issues, the Meta-research Innovation Center at Stanford (METRICS) convened a one-day workshop in January 2017 in Washington, DC, to discuss and propose strategies to hire, promote, and tenure scientists. The workshop comprised 22 people who represented different stakeholder groups from several countries (deans of medicine, public and foundation funders, health policy experts, sociologists, and individual scientists).

The outcomes of that workshop were summarized in a recent perspective, in which we described an extensive but non-exhaustive list of current proposals aimed at aligning assessments of scientists with desirable scientific behaviors. Some large initiatives are gaining traction. For instance, the San Francisco Declaration on Research Assessment (DORA) has been endorsed by thousands of scientists and hundreds of academic institutions worldwide. It advocates “a pressing need to improve how scientific research is evaluated, and asks scientists, funders, institutions and publishers to forswear using JIFs to judge individual researchers”. Other proposals remain ideas that have not yet been implemented.

At the University Medical Centre Utrecht, commitments to societal and clinical impact in collaboration with local and national communities are used as relevant indicators for assessing scientists. UMC Utrecht is collaborating with the Center for Science and Technology Studies Leiden to evaluate the effect of these interventions.

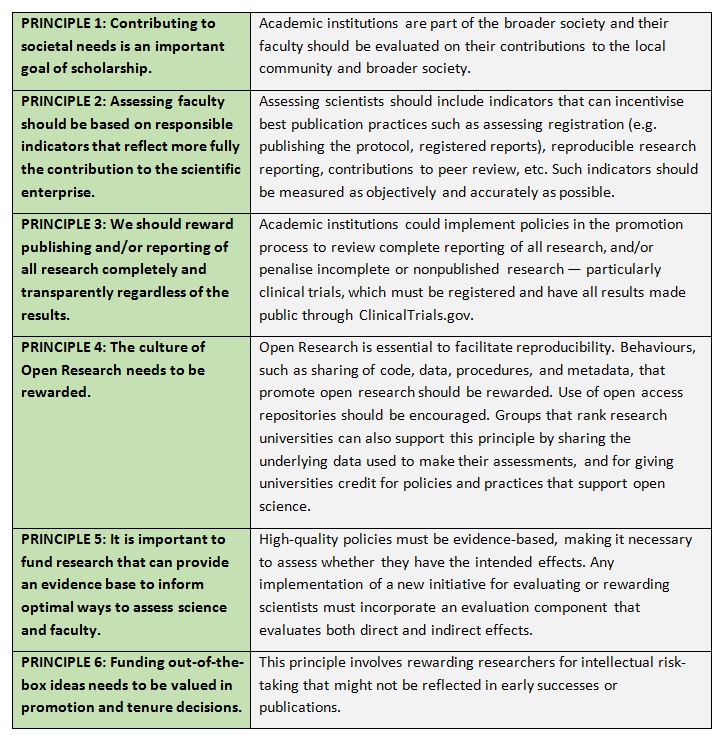

Attendees at this workshop endorsed the following set of principles, summarized in the perspective:

While these principles are not new, there is now a surge of activity preparing to apply them in academia. The G7 leaders recently indicated a need for change in how scientists are assessed. Europe might be ahead of the curve in endorsing and implementing changes. The new framework programme Horizon Europe adopts Open Science and Open Innovation as two of its three pillars. This calls for a more inclusive scheme for evaluating research and researchers, compatible with the one outlined here. The European Commission has published guidance to help EU Member States transition to Open Science (including the concept that data be “FAIR”: Findable, Accessible, Interoperable and Reusable). It is moving towards a structured CV that would include Responsible Indicators for Assessing Scientists (RIAS) and other related information.

Those efforts mainly focus on open science practices. Further work needs to be done by institutions, researchers, and policymakers to achieve Principle 1: defining how to assess a scientist’s contribution to societal needs. This could require universities to amend their internal assessment policies to explicitly incorporate their missions of knowledge dissemination and societal impact, which are themselves often not explicitly articulated. Such mission definitions and evaluations should include a broad spectrum of assessors, including the public or their representatives. And any movement in this direction, as with all the initiatives above, will require schemes for monitoring outcomes, both positive and negative.

We are at a crucial time in the movement for research reform, a movement that is crossing disciplinary and national borders. There is a window of opportunity now to make changes that were previously thought impossible. It is important that we take full advantage of this opportunity, striking the right balance between promising new approaches and the possible harm, in some settings, of abandoning current assessment tools, which can serve as a partial buffer against advancement based on personal connections or claimed reputation. We need to make changes with the same care and scrupulous standards we apply to science itself.

This blog post is based on the authors’ article, “Assessing scientists for hiring, promotion, and tenure”, published in PLoS Biology (DOI: 10.1371/journal.pbio.2004089).