How Bibliometrics Incentivize Self-Citation

Over the last two decades, national research evaluation systems have been implemented in several European and non-European countries. They often rely on quantitative indicators based on the number of papers published and citations received. Advocates of these metrics claim that quantitative measures are more objective than peer review, that they ensure the accountability of the scientific community to taxpayers, and that they promote a more efficient allocation of public resources. Critics argue that when indicators are used to evaluate performance, they rapidly become targets: they start to change the behavior of the evaluated researchers, following what is commonly called “Goodhart’s law.” For instance, if productivity is rewarded, publishing many articles becomes a target that can also be pursued with opportunistic strategies, such as slicing up the same work into multiple publications.

Italy in the years following the introduction of new research evaluation procedures in 2010 is a spectacular case in point. Since 2010, the Italian system has assigned a key role to bibliometric indicators in the hiring and promotion of professors. To achieve the National Scientific Habilitation (ASN), which is required to become an associate or full professor, a candidate’s work must reach definite “bibliometric thresholds,” calculated by the governmental Agency for the Evaluation of the University and Research (ANVUR). A candidate is admitted to the final step, evaluation by a committee of peers, only if at least two of her three indicators (citations, h-index, and number of journal articles) exceed the corresponding thresholds.
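The two-out-of-three rule can be sketched in a few lines of Python. This is only an illustration: the function name, the dictionary keys, and the threshold values are hypothetical, and ANVUR's actual thresholds are not reproduced here.

```python
def admitted_to_peer_evaluation(indicators, thresholds):
    """Return True if at least two of the three indicators exceed their thresholds.

    Both arguments are dicts keyed by indicator name. The keys and the values
    used below are illustrative assumptions, not ANVUR's actual figures.
    """
    passed = sum(indicators[name] > thresholds[name] for name in thresholds)
    return passed >= 2


# Hypothetical thresholds and candidate, for illustration only.
thresholds = {"citations": 100, "h_index": 10, "articles": 20}
candidate = {"citations": 150, "h_index": 12, "articles": 5}
print(admitted_to_peer_evaluation(candidate, thresholds))  # True: two of three exceeded
```

Because two of the three indicators are citation-based in this description, raising citation counts alone can be enough to clear the bar, which is what makes the rule sensitive to strategic citing.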

In a paper published in PLOS ONE (which has received significant attention in Nature, Science, and Le Monde), we show that the launch of this research evaluation system triggered anomalous behavior. In particular, after the university reform, Italian researchers started to artificially increase their indicators by strategically using citations.

Given the difficulties of documenting the existence of strategic citation behaviors at a micro-level, we designed a new “inwardness indicator,” based on country self-citations. For this purpose, the countries of the authors of a citing publication are compared with those of the cited papers. A country self-citation occurs whenever a citing publication and the cited one have at least one country in common.
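The definition of a country self-citation amounts to a set-overlap test. A minimal sketch, assuming each publication is represented simply by the set of its authors' countries (the function name and the two-letter country codes are illustrative choices, not part of the study):

```python
def is_country_self_citation(citing_countries, cited_countries):
    """True when the citing and cited publications share at least one country."""
    return not set(citing_countries).isdisjoint(cited_countries)


print(is_country_self_citation({"IT", "FR"}, {"IT"}))  # True: Italy appears on both sides
print(is_country_self_citation({"DE"}, {"IT", "US"}))  # False: no country in common
```

Note that an author self-citation is always a country self-citation under this test, but the reverse does not hold: two unrelated authors based in the same country also produce one.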

The inwardness indicator is defined as the ratio between the total number of country self-citations and the total number of citations of that country. We called it “inwardness” because, in general, it measures what proportion of the dissemination of the knowledge produced in a country remains confined within its borders.
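As a rough sketch, the ratio can be computed from a list of citations in which each citation is a pair of country sets (citing publication, cited publication). The data layout and function name are assumptions made for illustration. The sketch also assumes that, for a given country, a citation counts as a country self-citation when that country appears on both the citing and the cited side.

```python
def inwardness(country, citations):
    """Share of the citations received by `country` that come from within it.

    `citations` is an iterable of (citing_countries, cited_countries) pairs,
    one pair per citation, each element a set of country codes.
    """
    # Citations "of that country": those whose cited publication involves it.
    received = [(citing, cited) for citing, cited in citations if country in cited]
    if not received:
        return None  # the ratio is undefined without any citations
    self_citations = sum(1 for citing, _ in received if country in citing)
    return self_citations / len(received)


# Toy data, invented purely to exercise the function.
toy = [
    ({"IT"}, {"IT"}),
    ({"IT", "FR"}, {"IT", "US"}),
    ({"DE"}, {"IT"}),
    ({"US"}, {"IT", "DE"}),
    ({"FR"}, {"FR"}),
]
print(inwardness("IT", toy))  # 0.5: two of Italy's four citations come from Italy
```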

A country’s inwardness can be seen as the sum of a “physiological” and a “pathological” quota of country self-citations. The physiological quota consists of the self-citations generated by country-based researchers as a normal byproduct of their research activity. It depends both on the size of the country in terms of research production and on its degree of international collaboration, such as participation in multinational research teams. The pathological quota consists of the self-citations generated by the strategic activities of country-based researchers, that is, opportunistic self-citations and country-based citation clubs.

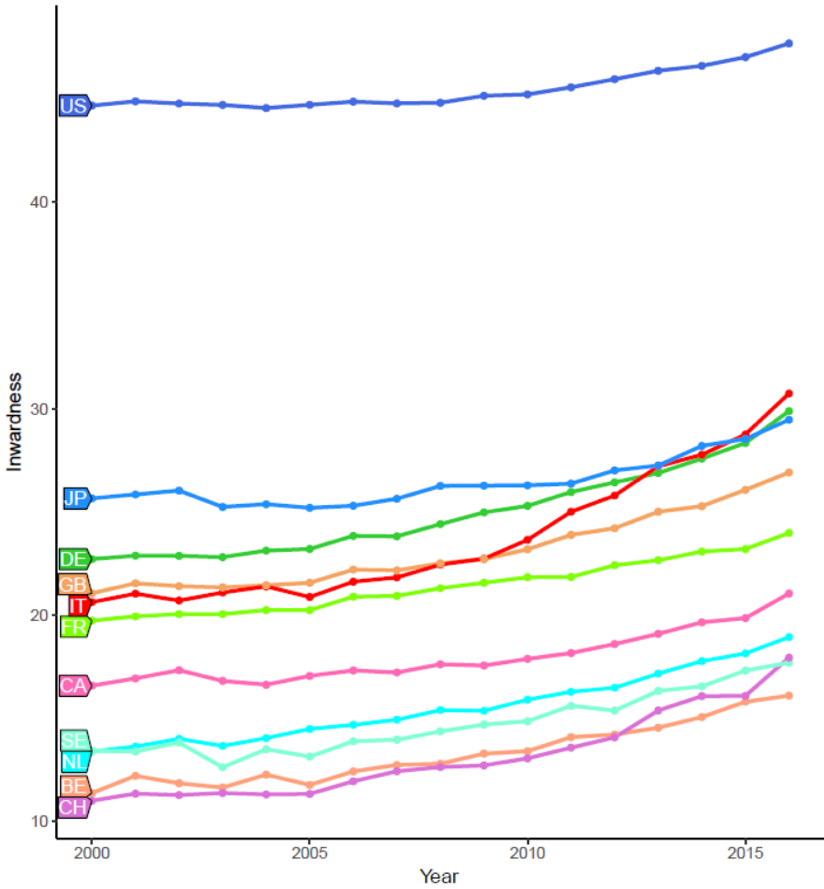

An anomalous rise in the inwardness of a country can result from an increase in either the physiological or the pathological quota of self-citations. Based on these premises, we calculated the inwardness indicators of the G10 countries in the period 2000-2016. In this way, we could compare the Italian trend not only before and after the introduction of the research evaluation system, but also against the trends of comparable countries. The data were gathered from SciVal, an Elsevier-owned platform powered by Scopus data.

Until 2009, all countries, including Italy, shared a rather similar inwardness trend. Then, the Italian trend suddenly diverged around 2010. In the period 2008-2016, the increase of the Italian inwardness amounted to 8.3 percentage points (p.p.), more than 4 p.p. above the average increase observed in G10 countries. Interestingly, we found almost the same pattern in most of the scientific areas, as defined by Scopus Categories: after the reform, Italy stands out with the highest inwardness increase in 23 out of 27 fields.
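The comparison behind these figures is simple arithmetic on percentage points. The sketch below uses made-up placeholder series, not the study's data, and assumes that the group average is taken over every country supplied, including the one being compared. The function names are hypothetical.

```python
def inwardness_increase(series, start, end):
    """Change in inwardness between two years, in percentage points.

    `series` maps a year to the inwardness of a country expressed in percent.
    """
    return series[end] - series[start]


def excess_over_group(trends, country, start, end):
    """How far a country's increase lies above the group's average increase."""
    increases = {
        name: inwardness_increase(series, start, end)
        for name, series in trends.items()
    }
    group_average = sum(increases.values()) / len(increases)
    return increases[country] - group_average


# Placeholder values chosen for readability; they are NOT the observed figures.
trends = {
    "A": {2008: 20.0, 2016: 28.0},
    "B": {2008: 30.0, 2016: 33.0},
    "C": {2008: 25.0, 2016: 26.0},
}
print(excess_over_group(trends, "A", 2008, 2016))  # 4.0 p.p. above the group average
```

Working in percentage points rather than relative growth keeps countries with very different baseline inwardness on a comparable scale.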

A generous explanation of this anomalous rise could be an increase in the physiological quota of country self-citations. For example, it could reflect a sudden rise, after 2009, in the degree of international collaboration of Italian scholars. However, no particular increase in Italian international collaboration can be observed. Another explanation could be a narrowing of the scientific focus of Italian researchers onto topics mainly investigated within the national community. We have no direct evidence for rejecting this hypothesis, but it appears very implausible, given the peculiarity, size, and, especially, the timing of the phenomenon.

The best explanation for the sudden increase in inwardness is a change in the pathological quota of country self-citations. This means that there was a spectacular increase either in authors’ self-citations or in citations exchanged within citation clubs formed by Italian scholars. This reflects a strategy clearly aimed at boosting bibliometric indicators in order to reach the thresholds set by the governmental agency, ANVUR.

The beginning of the Italian anomaly coincides with the launch of the research evaluation system, which suddenly introduced bibliometric thresholds into the key steps of the academic career while leaving ample room for strategic citing. The striking result is that the effects have become visible at a national scale and in most scientific fields.

Our results support the idea that researchers respond quickly to the incentives they are exposed to. They also show that the generalized adoption of incentives substantially altered the level and trend of bibliometric indicators calculated at the country level. This suggests that policy makers should exercise the greatest caution in using indicators for science policy.